[Graph Collaborative Filtering] Some Doubts About the Top-Conference Papers HCCF and LightGCL

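Recently I have been writing code to reproduce the ICLR 2023 paper "SIMPLE YET EFFECTIVE GRAPH CONTRASTIVE LEARNING FOR RECOMMENDATION" (LightGCL). To my surprise, the results were worse than LightGCN, and, even more absurdly, on some datasets the gradients exploded. The datasets were identical, so what was going on? For a while I assumed my own code was wrong, but after checking it N times I still could not find the problem: I had copied over the exact same forward function, yet the results were wildly different.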

In the end I found that the problem lies in the sampling function. The code is as follows (main.py in the GitHub repository HKUDS/LightGCL, "[ICLR'2023] LightGCL: Simple Yet Effective Graph Contrastive Learning for Recommendation"):

```python
e_users = np.random.permutation(adj_norm.shape[0])[:epoch_user]
batch_no = int(np.ceil(epoch_user/batch_user))

epoch_loss = 0
epoch_loss_r = 0
epoch_loss_s = 0
for batch in tqdm(range(batch_no)):
    start = batch*batch_user
    end = min((batch+1)*batch_user,epoch_user)
    batch_users = e_users[start:end]

    # sample pos and neg
    pos = []
    neg = []
    iids = set()
    for i in range(len(batch_users)):
        u = batch_users[i]
        u_interact = train_csr[u].toarray()[0]
        positive_items = np.random.permutation(np.where(u_interact==1)[0])
        negative_items = np.random.permutation(np.where(u_interact==0)[0])
        item_num = min(max_samp,len(positive_items))
        positive_items = positive_items[:item_num]
        negative_items = negative_items[:item_num]
        pos.append(torch.LongTensor(positive_items).cuda(torch.device(device)))
        neg.append(torch.LongTensor(negative_items).cuda(torch.device(device)))
        iids = iids.union(set(positive_items))
        iids = iids.union(set(negative_items))
    iids = torch.LongTensor(list(iids)).cuda(torch.device(device))
    uids = torch.LongTensor(batch_users).cuda(torch.device(device))
```

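As you can see, the code first shuffles the users (note: it only shuffles their order) and then splits them into batches by the batch size (256). Then, for each user in a batch, it picks min(max_samp, len(positive_items)) positive samples and the same number of negative samples.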

At first glance nothing seems wrong. Compare it with LightGCN's sampling function:

```python
def UniformSample_original_python(dataset):
    """
    the original impliment of BPR Sampling in LightGCN
    :return:
        np.array
    """
    total_start = time()
    dataset : BasicDataset
    user_num = dataset.trainDataSize
    users = np.random.randint(0, dataset.n_users, user_num)
    allPos = dataset.allPos
    S = []
    sample_time1 = 0.
    sample_time2 = 0.
    for i, user in enumerate(users):
        start = time()
        posForUser = allPos[user]
        if len(posForUser) == 0:
            continue
        sample_time2 += time() - start
        posindex = np.random.randint(0, len(posForUser))
        positem = posForUser[posindex]
        while True:
            negitem = np.random.randint(0, dataset.m_items)
            if negitem in posForUser:
                continue
            else:
                break
        S.append([user, positem, negitem])
        end = time()
        sample_time1 += end - start
    total = time() - total_start
    return np.array(S)
```

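As you can see, LightGCN randomly samples users and items at the same time (with replacement), and only afterwards splits the samples into batches by the batch size (4096). In my own experiments, the two sampling methods have a huge effect on the model: the former is more prone to overfitting. In fact, the HCCF author replied to me and acknowledged that the sampling method is flawed; he believes the flaw comes from each epoch concentrating too heavily on a small subset of users.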

Put bluntly, the former is an unreasonable, pseudo-random sampling method, and it very easily causes the model to overfit.
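To make the structural difference between the two schemes concrete, here is a minimal NumPy sketch that runs both on a toy interaction matrix and counts the training triples produced in one epoch. It is a simplification under stated assumptions, not either repository's code: the function names, the toy matrix, and `max_samp=5` are made up for illustration, and the sketch only compares how many (user, positive, negative) triples each user contributes. It does not attempt to reproduce the overfitting itself.

```python
import numpy as np

def per_user_capped_epoch(interactions, max_samp, rng):
    """Shuffle users once, then take up to max_samp positives (and as many
    negatives) per user, without replacement. Hypothetical simplification
    of the per-user scheme quoted above."""
    n_users = interactions.shape[0]
    triples = []
    for u in rng.permutation(n_users):
        pos = rng.permutation(np.flatnonzero(interactions[u] == 1))
        neg = rng.permutation(np.flatnonzero(interactions[u] == 0))
        k = min(max_samp, len(pos))
        # zip stops early if the user has fewer than k negatives
        triples.extend((u, p, n) for p, n in zip(pos[:k], neg[:k]))
    return np.array(triples, dtype=np.int64).reshape(-1, 3)

def uniform_bpr_epoch(interactions, rng):
    """Draw users with replacement, one draw per training interaction, and
    pair one random positive with one rejection-sampled negative.
    Hypothetical simplification of the uniform BPR scheme quoted above."""
    n_users, n_items = interactions.shape
    n_draws = int(interactions.sum())
    triples = []
    for u in rng.integers(0, n_users, size=n_draws):
        pos = np.flatnonzero(interactions[u] == 1)
        if len(pos) == 0 or len(pos) == n_items:
            continue  # no positive, or no item left to act as a negative
        p = rng.choice(pos)
        n = rng.integers(0, n_items)
        while interactions[u, n] == 1:
            n = rng.integers(0, n_items)
        triples.append((u, p, n))
    return np.array(triples, dtype=np.int64).reshape(-1, 3)

rng = np.random.default_rng(0)
# Toy user-item matrix (assumption): 50 users, 200 items, about 20% density.
interactions = (rng.random((50, 200)) < 0.2).astype(np.int8)

capped = per_user_capped_epoch(interactions, max_samp=5, rng=rng)
uniform = uniform_bpr_epoch(interactions, rng)

print("per-user capped scheme:", len(capped), "triples,",
      "max per user =", np.bincount(capped[:, 0]).max())
print("uniform BPR scheme:    ", len(uniform), "triples,",
      "max per user =", np.bincount(uniform[:, 0]).max())
```

On this toy matrix the capped scheme can emit at most 50 × 5 = 250 triples per epoch, and every user is visited exactly once, while the uniform scheme attempts one draw per training interaction and may hit the same user many times. Whether that gap explains the accuracy difference reported here is a separate question that only a real training run can answer.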

The SIGIR'22 paper "Hypergraph Contrastive Collaborative Filtering" (HCCF) has the same problem. Its sampling code is as follows:

```python
def sampleTrainBatch(self, batIds, labelMat):
    temLabel = labelMat[batIds].toarray()
    batch = len(batIds)
    temlen = batch * 2 * args.sampNum
    uLocs = [None] * temlen
    iLocs = [None] * temlen
    cur = 0
    for i in range(batch):
        posset = np.reshape(np.argwhere(temLabel[i]!=0), [-1])
        sampNum = min(args.sampNum, len(posset))
        if sampNum == 0:
            poslocs = [np.random.choice(args.item)]
            neglocs = [poslocs[0]]
        else:
            poslocs = np.random.choice(posset, sampNum)
            neglocs = negSamp(temLabel[i], sampNum, args.item)
        for j in range(sampNum):
            posloc = poslocs[j]
            negloc = neglocs[j]
            uLocs[cur] = uLocs[cur+temlen//2] = batIds[i]
            iLocs[cur] = posloc
            iLocs[cur+temlen//2] = negloc
            cur += 1
    uLocs = uLocs[:cur] + uLocs[temlen//2: temlen//2 + cur]
    iLocs = iLocs[:cur] + iLocs[temlen//2: temlen//2 + cur]
    return uLocs, iLocs

def trainEpoch(self):
    num = args.user
    sfIds = np.random.permutation(num)[:args.trnNum]
    epochLoss, epochPreLoss = [0] * 2
    num = len(sfIds)
    steps = int(np.ceil(num / args.batch))
    for i in range(steps):
        st = i * args.batch
        ed = min((i+1) * args.batch, num)
        batIds = sfIds[st: ed]
        target = [self.optimizer, self.preLoss, self.regLoss, self.loss]
        feed_dict = {}
        uLocs, iLocs = self.sampleTrainBatch(batIds, self.handler.trnMat)
        feed_dict[self.uids] = uLocs
        feed_dict[self.iids] = iLocs
        feed_dict[self.keepRate] = args.keepRate
        res = self.sess.run(target, feed_dict=feed_dict, options=config_pb2.RunOptions(report_tensor_allocations_upon_oom=True))
        preLoss, regLoss, loss = res[1:]
        epochLoss += loss
        epochPreLoss += preLoss
        log('Step %d/%d: loss = %.2f, regLoss = %.2f ' % (i, steps, loss, regLoss), save=False, oneline=True)
    ret = dict()
    ret['Loss'] = epochLoss / steps
    ret['preLoss'] = epochPreLoss / steps
    return ret
```

As you can see, this paper's sampling method is the same as LightGCL's.

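I noticed that people on both GitHub and Zhihu say that HCCF reproduces poorly, even worse than LightGCN. In my own tests LightGCL also fails to beat LightGCN. So I suspect that both papers have problems. In his reply, the author gave a link to the code they used to test the baseline models: https://github.com/HKUDS/SSLRec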

Once I looked at the parameters there, the differences turned out to be large (SSLRec/config/modelconf at main · HKUDS/SSLRec (github.com))

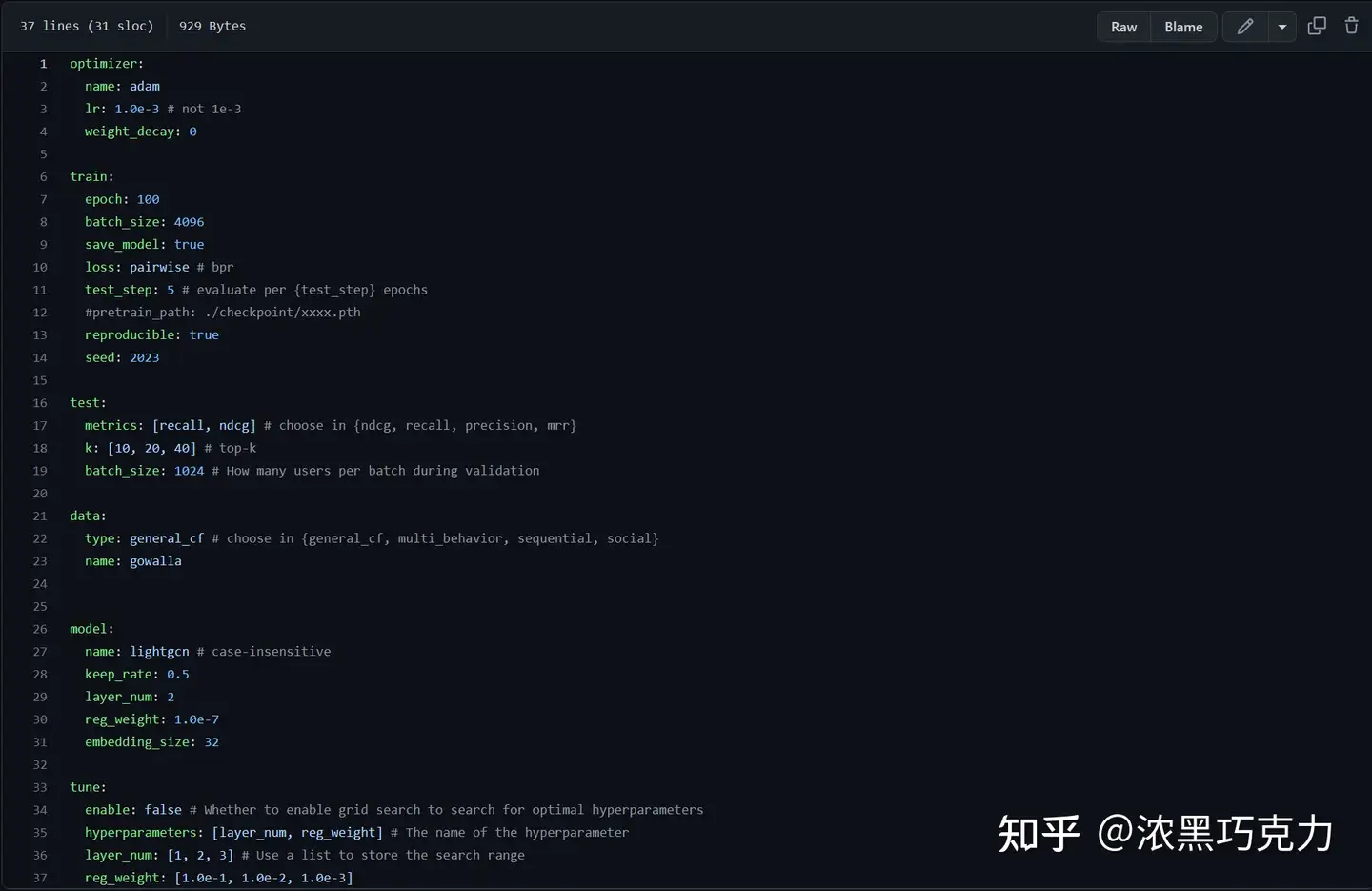

First, look at their parameter settings for LightGCN:

![img]()

https://github.com/HKUDS/SSLRec/blob/main/config/modelconf/lightgcn.yml

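The embedding size is cut from 64 to 32, the number of layers from 3 to 2, and the number of training epochs is slashed to just 100. LightGCN converges slowly and usually needs around 300 epochs to converge, yet here it gets only 100. It would be strange if the baseline did not score low.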
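A comparison like this is easy to script rather than eyeball. The sketch below diffs two hyperparameter dictionaries and reports each gap in orders of magnitude. The numbers are the ones quoted in the paragraph above; the key names are made up for this example and are not the actual keys in lightgcn.yml, and the 300-epoch figure is my rule of thumb for convergence, not an official setting.

```python
import math

def diff_configs(reference, tested):
    """Return {key: (reference value, tested value, log10 ratio)} for every
    shared numeric key whose value differs between the two configs."""
    report = {}
    for key in sorted(reference.keys() & tested.keys()):
        ref, new = reference[key], tested[key]
        if ref == new:
            continue
        # log10(new / ref): -1.0 means the tested value is 10x smaller
        gap = math.log10(new / ref) if ref > 0 and new > 0 else float("nan")
        report[key] = (ref, new, gap)
    return report

# Values quoted in the text; key names are hypothetical.
commonly_used = {"embedding_size": 64, "n_layers": 3, "epochs": 300}
sslrec_lightgcn = {"embedding_size": 32, "n_layers": 2, "epochs": 100}

report = diff_configs(commonly_used, sslrec_lightgcn)
for key, (ref, new, gap) in report.items():
    print(f"{key}: {ref} -> {new} ({gap:+.2f} orders of magnitude)")
```

The same helper works for any pair of settings once the two YAML files have been loaded into dictionaries.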

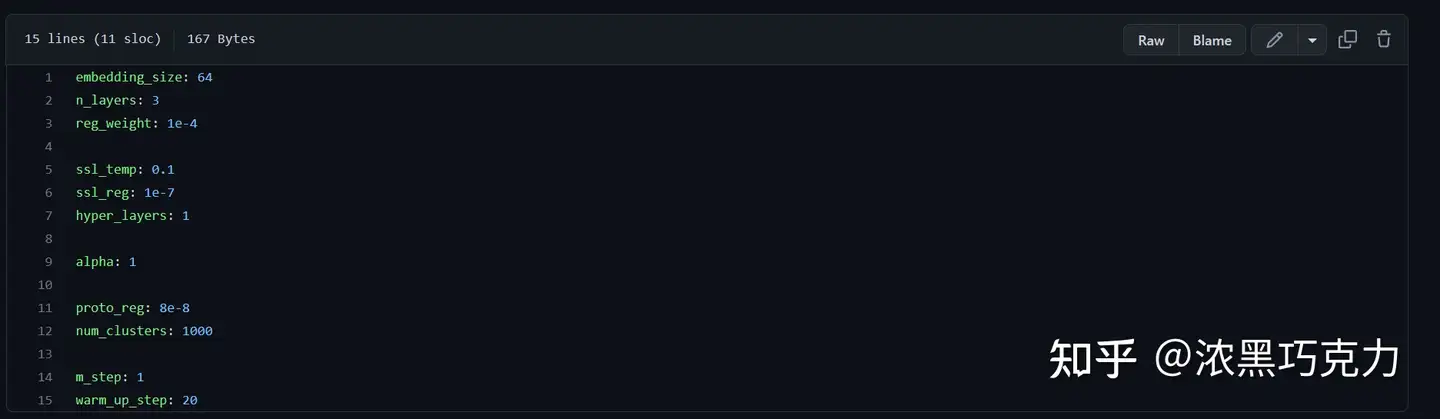

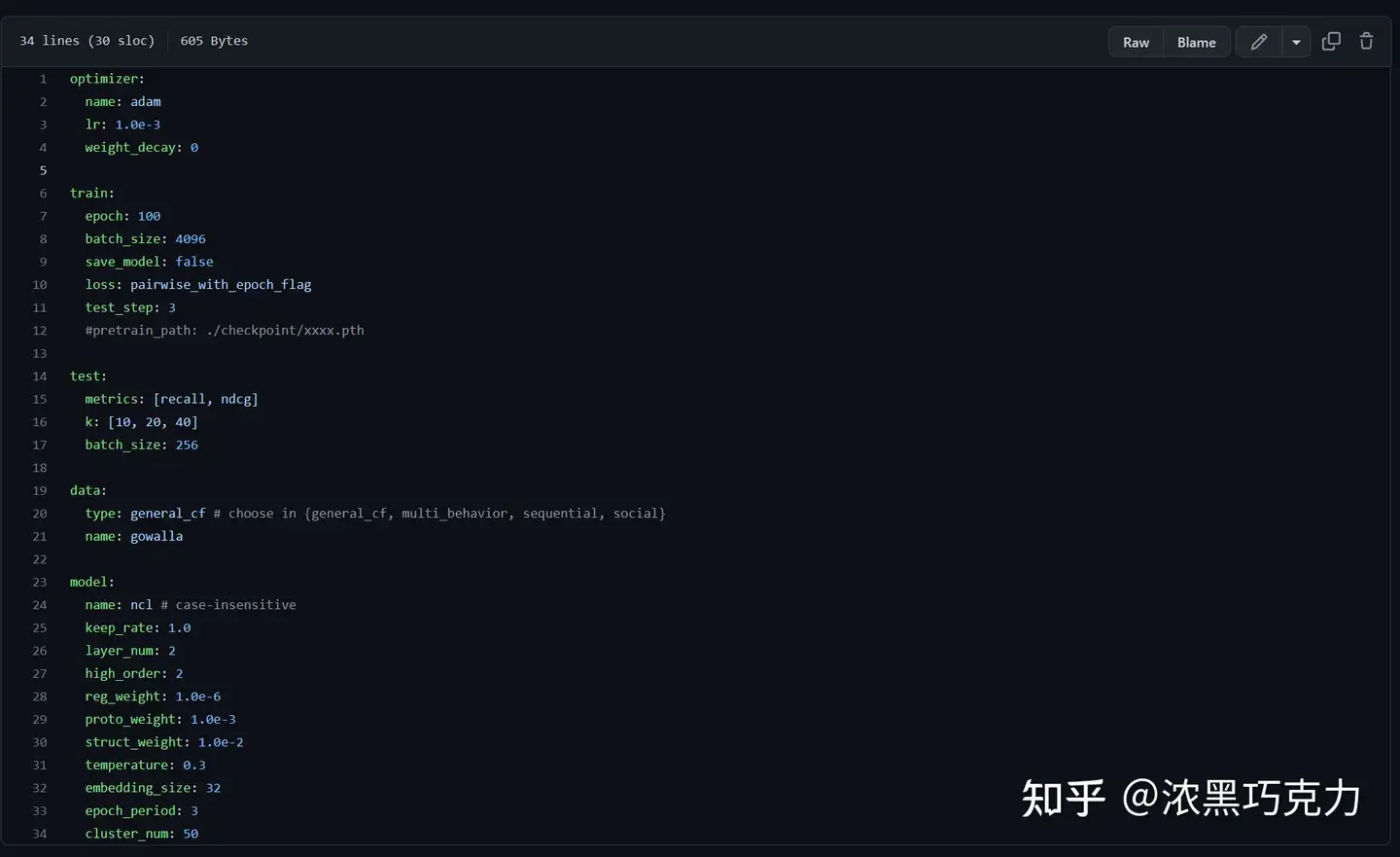

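Now look at their settings for NCL (WWW'22, "Improving Graph Collaborative Filtering with Neighborhood-enriched Contrastive Learning"), where the gap is even larger. Here are the parameter settings of the original model and of their test, so you can compare them yourself.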

![img]()

NCL source code: https://github.com/RUCAIBox/NCL/blob/master/properties/NCL.yaml

![img]()

Baseline test settings provided by the HCCF author: https://github.com/HKUDS/SSLRec/blob/main/config/modelconf/ncl.yml

The largest gap in a single parameter is five orders of magnitude. I emailed the author about this, and the author replied as follows:

![img]()

(The author's personal information is not included in the screenshot.)

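In fact, I also tested LightGCL with embedding=64 and layer=3, and it still does worse than LightGCN. I hope everyone keeps their eyes open when reading papers from A-ranked conferences: when something needs reproducing, reproduce it properly, and preferably write the code yourself. Otherwise you will fall into a pit without understanding how you got there.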